What are Interfaces?

- Interfaces are used in Oracle Applications to integrate external systems and Data Conversion.

- The interfaces are mainly used to either transfer data from Oracle Applications to a flat file or data from legacy system to Oracle Applications.

- Used extensively at the time of Data Conversion from legacy/ old systems to a fresh implementation of Oracle Applications.

- Used also at regular intervals when data transfer is from other live systems if the systems are not defined in Oracle Applications implementation.

- Oracle provides flexible and flexible tools in the form of Interface programs to import the master and transactional data like Customers, Invoices, and Sales Orders etc from external systems into Oracle Applications.

Types of Interfaces

There are two major types of Interfaces:

- Inbound Interface : These interfaces are used to transfer data from external systems to Oracle Applications.

- Outbound Interface : These interfaces are used to transfer data from Oracle Applications to external systems.

Two other distinctions of Interfaces:

- Open Interface: If the interface logic is provided by Oracle Applications, it is called an Open Interface.

- Custom Interface: If the interface logic needs to be developed by the implementation team, it is called a Custom Interface.

Interface Components

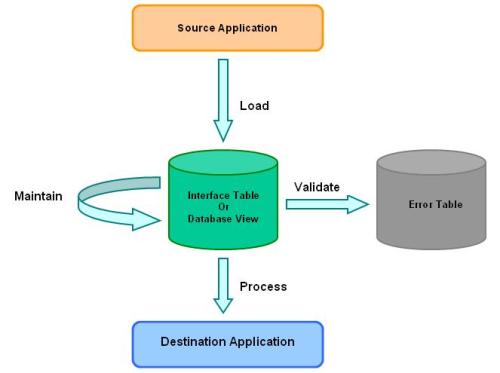

Open Interface Logic

- First the data from the source application is loaded into a database table (called Interface table).

- Then the provided validation program logic validates the records whether they are correct or not .

- If the validation fails, the errors are transferred into another table (called Error Table).

- If the validation succeeds, the correct records are transferred through a process into the destination application table.

Components of an Interface

a] Source Application:

You obtain data from a source application to pass on to a destination application for further processing and/or storage.

b] Source Data Issues:

Type of file, Size, Frequency of upload, Record Length (Variable or fixed), Delimiter, Datatype for each field, Any unwanted data, Naming convention and uniqueness of file, Location of the file, Access on the file.

c] Destination Application:

You send data to a destination application so that the application can perform further processing and/or storage.

d] Interface Table:

For inbound interfaces, the interface table is the intermediary table where the data from your source application temporarily resides until it is validated and processed into the destination application.

e] Identifier columns:

Uniquely identify rows in the interface table provide foreign key reference to both the source and destination applications.

f] Control Columns:

- Control columns track the status of each row in the interface table, as it is inserted, validated, rejected, processed, and ultimately deleted.

- WHO columns are also control columns.

g] Data Columns:

- Stores the data that is being converted.

- Required columns store the minimum information needed by the destination application to successfully process the interface row.

h] Derived Columns:

Derived columns are created by the destination application from information in the required columns.

i] Optional Columns:

Optional columns are not necessarily required by the destination application, but can be used by the destination application for additional value-added functionality beyond the basics.

j] Error Table:

- For inbound interfaces, the errors table stores all errors found by the validation and processing functions.

- In some cases, the errors table is a child of the interface table. This allows each row in the interface table to have many errors, so that you can easily manage multiple errors at once.

- In other cases, the errors are stored in a column within the interface table, which requires you to fix each error independently.

Developing an Interface

1] Identification:

Find out if there exists an Open Interface to carry out the functionality.

2] Creation of Pre-Interface table ( staging Table):

A table in the format of the data file which can be pruned to load as clean a data into the Interface table.

3] Load data into Pre-Interface table:

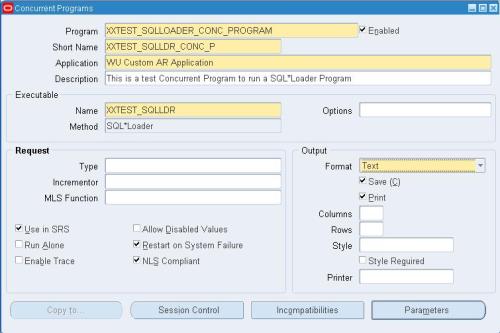

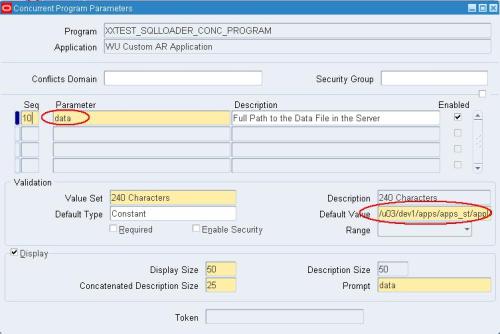

SQL*LOADER can be used to load the flat file into the pre-interface table.

4] Validate data in the Pre-Interface table:

Basic validation of the data loaded into the Pre-Interface table can be carried out like:

- For checking NULL values in required columns

- Checking for Foreign Key and Quick Code values.

- Duplication Validation

- Business Rule validation

5] Mapping the values:

Generated fields in Oracle Applications can be mapped in this step to either default values or sequences.

6] Load data into Interface table:

- Once the data is as clean as you can get it, the data can be inserted into the Interface table.

- At such a time, certain columns, which are necessary in Applications but not found in legacy system, need to be populated accordingly like WHO columns.

7] Run the interface program

8] Check for Errors

9] Report on the Interface

in a parameter file. This parameter file is executed from the command prompt. A parameter file has a .par extension. Following is a sample parameter file.

in a parameter file. This parameter file is executed from the command prompt. A parameter file has a .par extension. Following is a sample parameter file.

Recent Comments